How do companies analyze what an ordinary Joe should be wearing?

Data Engineering from the lens of a Software Engineer

Of course, they use AI... right??

Well, yes and no.

Sure, AI and Machine Learning (ML) are big parts of it, but they rely on data—lots of data (Big Data, anyone?)—to train model and be analyzed.

Humans like Data Analysts and Scientists spot trends in the market, then tweak strategies to make sure the recommendation systems are on point.

But it’s Data engineering and engineers that makes the magic happen. Huge Respect!

But hey, I’m a software engineer, so why should I care about data engineering? 🧐

Think twice, you might be wrong!

Today, I'll give software engineers a quick intro to data engineering, covering:

Why It Matters: Why learning data engineering basics is helpful, even if you’re not aiming to become a data engineer.

Role Breakdown: A simple example to explain the roles of software engineers, data engineers, analysts, and scientists, and how they work together.

Tech Overview: A snapshot of data engineering platforms, tech stacks, and processing workflows.

👋 Hey there, I am Gourav. I write about Engineering, Productivity, Thought Leadership, and the Mysteries of the mind!

Tech Comprehension #1

Welcome to the latest edition type of The Curious Soul’s Corner! Every month, I’ll drop a tech story that helps you get how systems work. Don’t just gather knowledge—build understanding!

Why should software engineers care about data engineering?

As a junior engineer, terms like "Big Data," "Spark," and "Data Warehouse" would fly right over my head.

I used to think, "That’s for the data engineers. I’m busy building distributed systems, so why should I bother?"

Being a senior engineer, I realized knowing data pipelines, batch-processing, and data analysis influences my system’s design.

So, I rolled up my sleeves and dove into a project and got hands-on experience with MapReduce, and Spark—just enough to get by.

Then, I got pulled into other business-critical projects and that little spark (pun intended) of interest in data engineering fizzled out.

As a staff engineer now, there’s no more room for excuses. I need to understand enough to help the company make tradeoff decisions.

In general, software engineers benefit by just knowing the basics:

Better System Design & Architecture: Knowing data pipelines will help you understand their trade-offs. You'll build more scalable, efficient systems.

Enhanced Debugging: Ever had a bug that’s tied to data? Understanding data will make you a better problem-solver when those tricky issues pop up.

Data-Informed Decision Making: As you grow into leadership roles, understanding data engineering will help you. It will give you more insight into product, system, and business decisions.

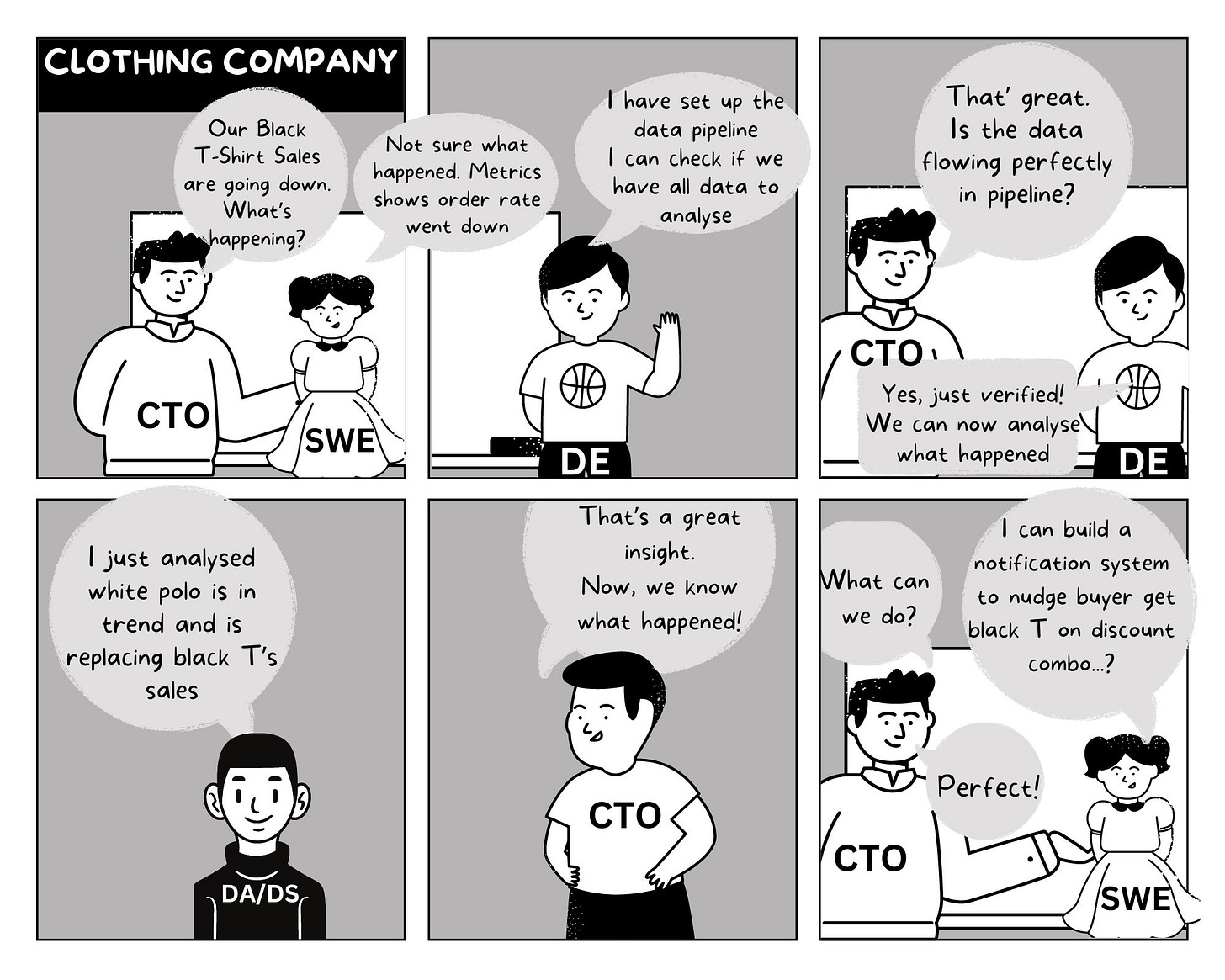

The Difference Between Software Engineers, Data Engineers, Data Analysts, and Data Scientists

Here’s the breakdown:

Data Engineers build data pipelines—systems that transform raw data into something useful for analysis.

Data Analysts take that clean, processed data and use it to find patterns and trends, helping companies figure out what’s happening now.

Data Scientists go a step further by building models that predict future trends and offer recommendations.

Software Engineers build the systems that solve the problems those data insights reveal.

In short, the data engineer sets up the infrastructure. The data analyst finds current trends. The data scientist makes future predictions. The software engineer builds the product that uses them. 🚀

Data Engineering Tech Stack: A Primer for Software Engineers

Oh man! The field’s come a long way, and it’s still changing fast!

Concepts like Data Lakes and Lakehouses and tools like Trino, Flink, data formats like Apache Iceberg, Avro, and Parquet.

More confusing than ever!

I’ll try to explain simply and cover only the Tech Stack of Data Engineering.

Tech Stack of Data Engineering

The image below is a courtesy of

. Thanks to him for creating this.As a software engineer, you're likely familiar with the request lifecycle in an app. It starts with an API call (ingestion), runs some business logic (processing), then saves it to a database (storage). This is what we call Online Transaction Processing (OLTP), where the system handles day-to-day operations.

Data engineering works similarly but picks up after your application’s database. Just as APIs bring in data for apps, data engineers create pipelines. They process and store data on a much larger scale for analytics. This is called Online Analytical Processing (OLAP).

The Basics: ETL vs. ELT

Data engineering revolves around one key idea: building systems that ingest, process, and store data in ways that make it useful for analysis. This process is called building a data pipeline, and the most common architecture for this is ETL:

Extract: You grab raw data from various sources like APIs, databases, or files.

Transform: You clean and shape the data into a usable format.

Load: You store it somewhere (like a data warehouse) where analysts and business tools can easily access it.

There’s also ELT, which is like ETL’s cooler cousin. Instead of transforming the data right away, you load it into a storage system first and then transform it as needed. This is especially useful when you don’t know upfront what the data might be used for. It keeps your options open and flexible.

Data Warehouses, Lakes, and Lakehouses

Where does all this data go? Great question!

Data Warehouse: This is structured storage for quick analysis. It’s fast and optimized for answering specific questions.

Data Lake: This is a giant storage pool for raw data. It’s great for when you have tons of different data but aren’t sure yet what you want to do with it.

Lakehouse: A happy blend of the two. It offers the flexibility of a data lake with the structured query power of a data warehouse.

The evolution from warehouses to lakes to lakehouses happened because businesses needed faster, more scalable ways to handle growing amounts of data.

Big Data: The Heavyweights

Big Data is all about handling massive amounts of information, the kind that Amazon, Netflix, and Instagram deal with every day. Traditional systems can’t handle this scale, so we use distributed systems like Hadoop.

Hadoop is an ecosystem that chops up huge datasets and processes them across multiple servers at once, kind of like an army of worker bees.

Here’s the core of Hadoop:

HDFS (Hadoop Distributed File System): Stores huge amounts of data.

MapReduce: Breaks down data tasks and processes them in parallel.

YARN: Keeps everything running smoothly.

Other cool tools you should know about:

Apache Spark: A super fast engine for processing big data in near real-time.

Apache Kafka: A messaging system for streaming data.

Apache Flink: A tool that handles both real-time and batch processing.

These tools are like the superheroes of Big Data, ensuring massive amounts of data can be processed and analyzed efficiently.

File Formats: Keeping Data Organized

When managing massive amounts of data, the format you choose can make or break performance and flexibility. Let’s break down some popular data formats you’ll see in data warehouses and lakehouses:

Iceberg & Delta (Lakehouse Formats)

Apache Iceberg is the table format for huge, distributed datasets. It supports file formats like Parquet and ORC, and cool features like time travel (yes, you can query old data!) and dynamic partitions. Works great with Spark, Trino, and Flink.

Delta Lake (by Databricks) is a fast, real-time data pro, optimized for Spark. It supports Parquet, gives you schema control, and speeds up read-heavy tasks like machine learning.

JSON & Parquet (Warehouse Formats)

JSON is the human-friendly, semi-structured format perfect for web apps, but not the fastest for big data crunching.

Parquet is the warehouse star! A columnar format is perfect for large datasets. It's efficient for analytics and supported by all the big data platforms.

There’s a lot about this data format that we can detail out but that’s beyond the scope of a basic primer. I may cover this in a future edition of Tech Comprehension.

Wanna build a strong Data Engineering understanding and make a career in it?

If you want to build a solid understanding of Data Engineering, try out

’s bootcamp here. Use coupon code “GOURAV” and you will get 25% off. :)Shoutouts 🔊

A big shout-out to all the Data Engineering folks I know

, , , . You all are rockstars!Also, special shoutout to other Tech newsletter writers -

, , - writing tech deep dive newsletter is hard.Interesting reads last week:

SWE v/s MLE by

and5 Keys to the Hiring Manager Interview from a Meta Senior Manager by

andBeing an engineering manager at Amazon by

andI Worked More and Achieved Less by

🤝 Let’s Connect

Sponsorship | Collaboration | LinkedIn | 1:1 Mentoring | Twitter

Gourav Khanijoe

This couldn’t have popped up at a better time. I’m trying to push for greater collaboration between departments in my org, with my latest venture being with our Data Engineering team.

I had a rough idea what they did and I hold some archaic knowledge of data pipelines, but this article has really given me a decent insight into the field. Thanks!

Wow, this is the most technical article I've seen from you, Gourav but I liked it! I learned a good amount about data engineering differences--something I don't get a lot.

Also appreciate the shout-out on the article collab with Stefan on keys to the hiring manager interview 🙏